Probabilistic Task-Adaptive Graph Rewiring

Abstract

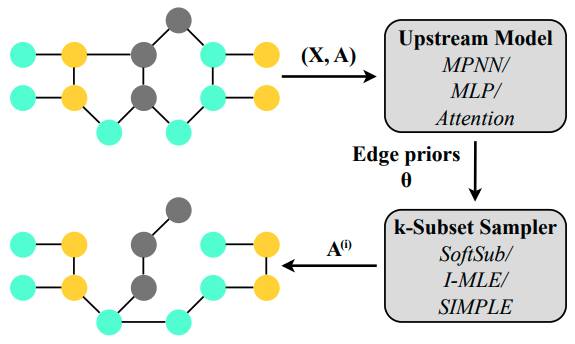

Message-passing graph neural networks (MPNNs) have emerged as powerful tools for processing graph-structured input. However, they operate on a fixed graph structure, ignoring potential noise and missing information. In addition, due to their purely local aggregation mechanism, they are susceptible to phenomena such as over-smoothing, over-squashing, or under-reaching. Hence, devising principled approaches for learning to focus on graph structure relevant to the given prediction task remains an open challenge. In this work, leveraging recent progress in differentiable $k$-subset sampling, we devise a novel task-adaptive graph rewiring approach, which learns to add relevant edges while omitting less beneficial ones. We empirically demonstrate on synthetic datasets that our approach effectively alleviates the issues of over-squashing and under-reaching. In addition, on established real-world datasets, we demonstrate that our method is competitive with or superior to conventional MPNN models and graph transformer architectures regarding predictive performance and computational efficiency.